
When it comes to artificial intelligence, Google doesn’t just show up—they usually change the conversation. And with their Gemini models, that’s exactly what’s happening. You’ve probably heard the name pop up here and there, but what do these models actually do? Why are there so many of them? And how are they different from the usual AI tools that are already out there?

Let's break it down without buzzwords or any of that flashy language—just a simple look at the seven Gemini models that are worth knowing.

This is where it all began. Gemini 1.0 is Google's foundational large language model, and while it doesn't try to impress with gimmicks, it's the reason the rest exist. It was trained across a blend of text, code, images, and audio, meaning it doesn't just understand words; it's built to handle multiple types of content in one go.

What sets Gemini 1.0 apart is its multimodal approach. Multimodality wasn't stitched on as an afterthought; the model was built to work with different formats from the beginning. So, if you're working with images and code or audio and text, it doesn't treat them as separate things. It takes in the shared context and handles it all as one conversation.

Gemini Pro is the middle ground. Think of it as the model that's good at everything without being too specialized. It's smaller and faster than the Ultra version, but it still brings solid reasoning skills and smooth performance for most tasks.

It's often used in tools like Bard (which Google renamed Gemini in February 2024) and Google Workspace features (like Gmail's writing suggestions or Docs assistance), and for good reason: it's quick, accurate, and doesn't slow down when things get complex. Most people don't even realize they're using it, which says a lot. If a tool works well enough to blend into the background, it's probably doing its job right.

This one is the top-tier model, made for high-level reasoning, advanced problem-solving, and complicated tasks that the average model might trip over. Gemini Ultra was designed with a sharper focus on logic, math, and code-based queries—so it's not just about sounding human; it's about getting answers right.

It’s not something you’ll find running every mobile app—it’s built for researchers, enterprise tools, and more advanced use cases where detail actually matters. When you hear people comparing Gemini to GPT-4, they’re usually talking about Ultra.

Gemini Nano is small—but that doesn't mean basic. It was designed for on-device tasks, meaning it can run directly on your phone without needing to connect to the cloud every time. Pixel 8 Pro was one of the first phones to ship with it, and that's just the start.

Why does this matter? Well, local models like Nano mean faster response times, fewer privacy concerns, and smarter features that work even without a signal. Whether it's summarizing notifications or improving speech recognition, Nano is designed to stay light without losing accuracy. It's the AI model that just quietly does its job behind the scenes.

As the name suggests, Gemini Flash is built for speed. It's a newer addition that is optimized for real-time responses and tasks where latency is an issue. It's the model you'd turn to if you're working on chatbots or interactive tools that need to reply fast—without sacrificing too much of the model’s intelligence.

This one works best when you’re building things that people expect to move fast—think customer service tools, voice assistants, or live translation features. It might not have all the nuance of Ultra, but it's not trying to. Flash is about getting solid answers quickly.

If you work in development or even just tinker with code, Gemini Code is where things get interesting. It was trained with a deeper understanding of programming languages, libraries, and frameworks, so it doesn’t just guess—it understands what your code is trying to do.

Code generation, debugging suggestions, translating code from one language to another—this model is designed to make those tasks easier. It’s meant to help, not take over, and most developers who use it don’t feel like they’re handing over control. They just get to move faster, especially on repetitive or annoying tasks.

This one handles visual data—images, diagrams, charts—and can pair it with text-based analysis. It’s useful in scenarios where a picture really is worth a thousand words, but you still need words to make sense of it.

Gemini Vision was made for medical imaging, data visualization, and any other task that requires understanding the layout or content of an image. It's trained to understand visual context and relate it back to text. So whether you're trying to extract meaning from a chart or figure out what a blueprint represents, this model is built to handle it.

Each version of Gemini exists for a reason. Google didn’t just want a one-size-fits-all model—they’ve clearly thought about where each of these would make the most sense. Some are meant for your phone. Some belong in research labs. Some are built for creative work, while others stay laser-focused on speed or code.

The idea isn’t to flood the AI space with variations for the sake of variety. It’s to fit different needs. Whether you're writing an email, running a call center, building an app, or analyzing images—there’s a Gemini model that fits that job.
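To make that job-to-model matching concrete, here is a minimal sketch of it as a lookup table. The task labels and the `pick_model` helper are hypothetical, made up for illustration, and the values are the model names as this article uses them, not official API identifiers. The fallback to Gemini Pro reflects the article's description of it as the general-purpose middle ground.

```python
# Hypothetical lookup table: the task labels and the helper name are
# illustrative only. The values are the model names as this article
# describes them, not official API model identifiers.
MODEL_FOR_TASK = {
    "email drafting": "Gemini Pro",
    "advanced reasoning": "Gemini Ultra",
    "on-device summaries": "Gemini Nano",
    "call center chatbot": "Gemini Flash",
    "code generation": "Gemini Code",
    "image analysis": "Gemini Vision",
}


def pick_model(task: str, default: str = "Gemini Pro") -> str:
    """Return the article's suggested model for a task, or a general-purpose default."""
    # Normalize the label so "Image analysis" and "image analysis" match.
    return MODEL_FOR_TASK.get(task.strip().lower(), default)


print(pick_model("Image analysis"))  # Gemini Vision
print(pick_model("write a poem"))    # Gemini Pro (no specific entry, so the default applies)
```

A real application would likely weigh latency, cost, and where the model runs rather than a single task label, but the table captures the basic idea of one family with different fits.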

AI isn't just about building smarter machines anymore. It's about making the tools around us feel more natural and helpful—without being intrusive or overly technical. The Gemini models from Google are doing that quietly, not by being loud or overly complicated but by working where they're needed and staying out of the way when they're not.

So, if you're curious about what Google's up to in the AI space, these seven models are a good place to start. Each one has a role, and together, they're shaping the way we use smart tools every day—whether we notice it or not.

Advertisement

Confused about machine learning and neural networks? Learn the real difference in simple words — and discover when to use each one for your projects

Explore the top 8 AI travel planning tools that help organize, suggest, and create customized trip itineraries, making travel preparation simple and stress-free

Wondering how everything from friendships to cities are connected? Learn how network analysis reveals hidden patterns and makes complex systems easier to understand

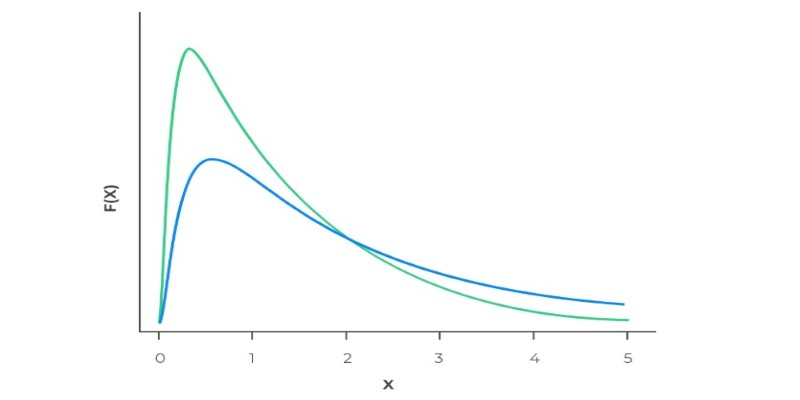

Ever wonder why real-world data often has long tails? Learn how the log-normal distribution helps explain growth, income differences, stock prices, and more

Explore how labeled data helps machines learn, recognize patterns, and make smarter decisions — from spotting cats in photos to detecting fraud. A beginner-friendly guide to the role of labels in machine learning

Wondering if there’s an easier way to add up numbers in Python? Learn how the sum() function makes coding faster, cleaner, and smarter

Wondering why your data feels slow and unreliable? Learn how to design ETL processes that keep your business running faster, smoother, and smarter

Working with rankings or ratings? Learn how ordinal data captures meaningful order without needing exact measurements, and why it matters in real decisions

Ever wonder how data models spot patterns? Learn how similarity and dissimilarity measures help compare objects, group data, and drive smarter decisions

Think of DDL commands as the blueprint behind every smart database. Learn how to use CREATE, ALTER, DROP, and more to design, adjust, and manage your SQL world with confidence and ease

Looking for smarter AI that understands both text and images together? Discover how Meta’s Chameleon model could reshape the future of multimodal technology

Think data science is just coding? See how math shapes predictions, decisions, and the models that power everything from apps to research labs