Advertisement

Every business, no matter its size, runs on information. And the way that information moves from one place to another can make or break how well a company functions. This is where ETL processes — Extract, Transform, Load — step in. ETL is what ensures that data from different systems comes together cleanly, clearly, and on time. But here's the catch: if not done right, ETL can quickly turn into a slow, costly mess that drags everything down.

So, how can you make ETL processes more efficient? Good question — and there’s a lot to unpack.

It’s easy to think of ETL as a simple backstage task that no one needs to worry about. After all, if the data shows up where it's supposed to, does it matter how it got there? Actually, yes — a lot.

Poorly designed ETL systems often lead to delays, missing information, and broken reports. And when people can’t trust the data they’re looking at, they hesitate. Decisions slow down. Opportunities slip away. On the other hand, a fast and clean ETL system ensures that everyone — from analysts to executives — gets the information they need without having to wonder if it’s correct.

Data integration done well keeps everything humming smoothly. It’s what allows a company to react to the market faster, spot trends earlier, and serve customers better. So, investing in efficient ETL strategies isn’t just a technical choice. It's a business choice.

Before diving into strategies, it helps to understand what separates a sluggish ETL process from a smooth one. There are a few key factors:

One of the biggest ETL pitfalls? Jumping in without fully understanding where the data is coming from. Data can be messy — fields that mean one thing in one system might mean something completely different in another. Or worse, a field might mean nothing at all.

Before setting up ETL workflows, it’s smart to spend time getting familiar with each source. What data is available? How is it structured? What quirks or gaps are lurking beneath the surface? Having a clear handle on all of this helps avoid surprises down the line.

Once you know your sources, the next step is deciding how to move that data into its new home. That’s where mapping rules come in. Think of them as a set of instructions: “Take this field from the old system, clean it up like this, and put it here in the new system.”

Without strong, well-documented mapping rules, ETL quickly turns chaotic. Teams waste time guessing how data should flow. Reports break without warning. And cleaning up the mess later is always harder than doing it right from the start.

Not all data should be moved exactly as it is. Sometimes, it needs to be cleaned, restructured, or even combined with other data before it's useful. That's the "Transform" part of ETL — and it's where a lot of the magic happens.

Keeping transformations as simple and efficient as possible pays off. Avoiding unnecessary complexity keeps processes faster and easier to maintain. After all, nobody wants to be the person stuck decoding a 300-line transformation script three years from now.

Now that we've got the basics down let's dig into what really helps make ETL processes faster and better:

Pulling every record from a system every single time is a fast track to frustration. Full loads are slow, heavy, and rough on both the source and destination systems. Whenever possible, it’s smarter to use incremental loads instead.

Incremental loads focus only on the data that has changed since the last ETL run. Maybe it’s new records, maybe it’s updated ones — either way, it’s a much smaller, more manageable chunk. This approach speeds up processing times, reduces errors, and keeps systems happier overall.

Why move data one table at a time when you could move ten? Parallel processing allows multiple pieces of data to be extracted, transformed, and loaded at the same time.

Of course, it needs to be done carefully — too much at once can overwhelm your systems. But when managed properly, it’s a powerful way to cut down ETL runtimes by a huge margin.

Sometimes, it makes sense to move data first and transform it later. But often, it’s faster to do some transformations at the source itself — before the data even leaves the original system.

This approach reduces the amount of data that needs to travel. Plus, it often lets you take advantage of the source system’s strengths — like fast, optimized queries. Less moving around equals faster ETL.

Just because an ETL job worked well six months ago doesn’t mean it’s still the best way to move data today. Systems change. Data volumes grow. What once was fast can slowly become a bottleneck.

Setting up regular ETL reviews helps spot these slowdowns early. Maybe it’s time to tweak a query. Maybe an index needs to be added. Or maybe a whole new approach would work better now. Staying proactive keeps things running smoothly without last-minute panic.

Even the best strategies can fall flat if a few common traps aren’t avoided:

Overloading transformations: Packing too much into a single ETL step can slow everything down and make troubleshooting a nightmare.

Ignoring error handling: When something goes wrong — and it will — you want clear, useful error messages. Otherwise, tracking down the problem turns into guesswork.

Poor resource management: ETL jobs shouldn’t hog all system resources and leave other processes gasping. Good scheduling and smart resource allocation make a big difference.

Lack of documentation: Without clear notes on what ETL processes are doing, fixing problems or making changes later becomes a frustrating guessing game.

A little extra care upfront saves a lot of pain later.

Efficient ETL isn’t about rushing through data moves as fast as possible. It’s about setting up systems that are smart, reliable, and built to last. Clear source understanding, strong mapping rules, smart transformations, and a few proven speed strategies make all the difference. By treating ETL as a core part of your data integration — not just a back-office task — you set yourself up for better decisions, faster reactions, and a much smoother ride overall. Good ETL doesn’t just move data. It moves businesses forward.

Advertisement

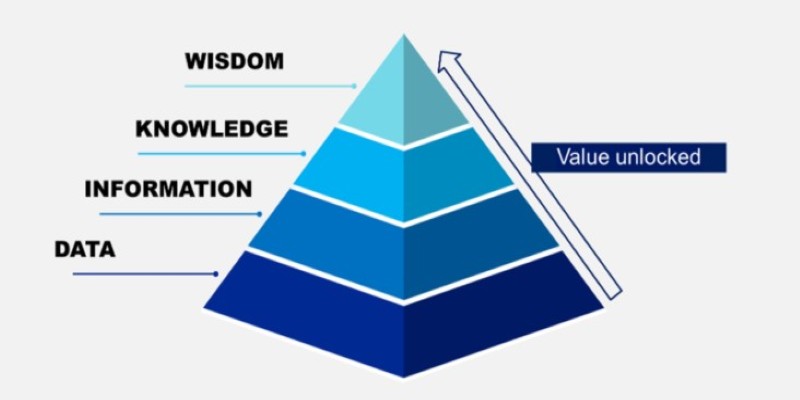

Ever wondered how facts turn into smart decisions? Learn how the DIKW Pyramid shows the journey from raw data to wisdom that shapes real choices

Wondering why your data feels slow and unreliable? Learn how to design ETL processes that keep your business running faster, smoother, and smarter

Looking for smarter AI that understands both text and images together? Discover how Meta’s Chameleon model could reshape the future of multimodal technology

Learn what stored procedures are in SQL, why they matter, and how to create one with easy examples. Save time, boost security, and simplify database tasks with stored procedures

Wondering how everything from friendships to cities are connected? Learn how network analysis reveals hidden patterns and makes complex systems easier to understand

Explore how labeled data helps machines learn, recognize patterns, and make smarter decisions — from spotting cats in photos to detecting fraud. A beginner-friendly guide to the role of labels in machine learning

Curious about Llama 3 vs. GPT-4? This simple guide compares their features, performance, and real-life uses so you can see which chatbot fits you best

Confused about whether to fine-tune your model or use Retrieval-Augmented Generation (RAG)? Learn how both methods work and which one suits your needs best

Understand the principles of Greedy Best-First Search (GBFS), see a clean Python implementation, and learn when this fast but risky algorithm is the right choice for your project

Confused about machine learning and neural networks? Learn the real difference in simple words — and discover when to use each one for your projects

Apple unveiled major AI features at WWDC 24, from smarter Siri and Apple Intelligence to Genmoji and ChatGPT integration. Here's every AI update coming to your Apple devices

The Dead Internet Theory claims much of the internet is now run by bots, not people. Find out what this theory says, how it works, and why it matters in today’s online world